Model Performance

This section provides detailed performance metrics for the models available in TensorNets. The results were obtained by running the models on standard benchmark datasets.

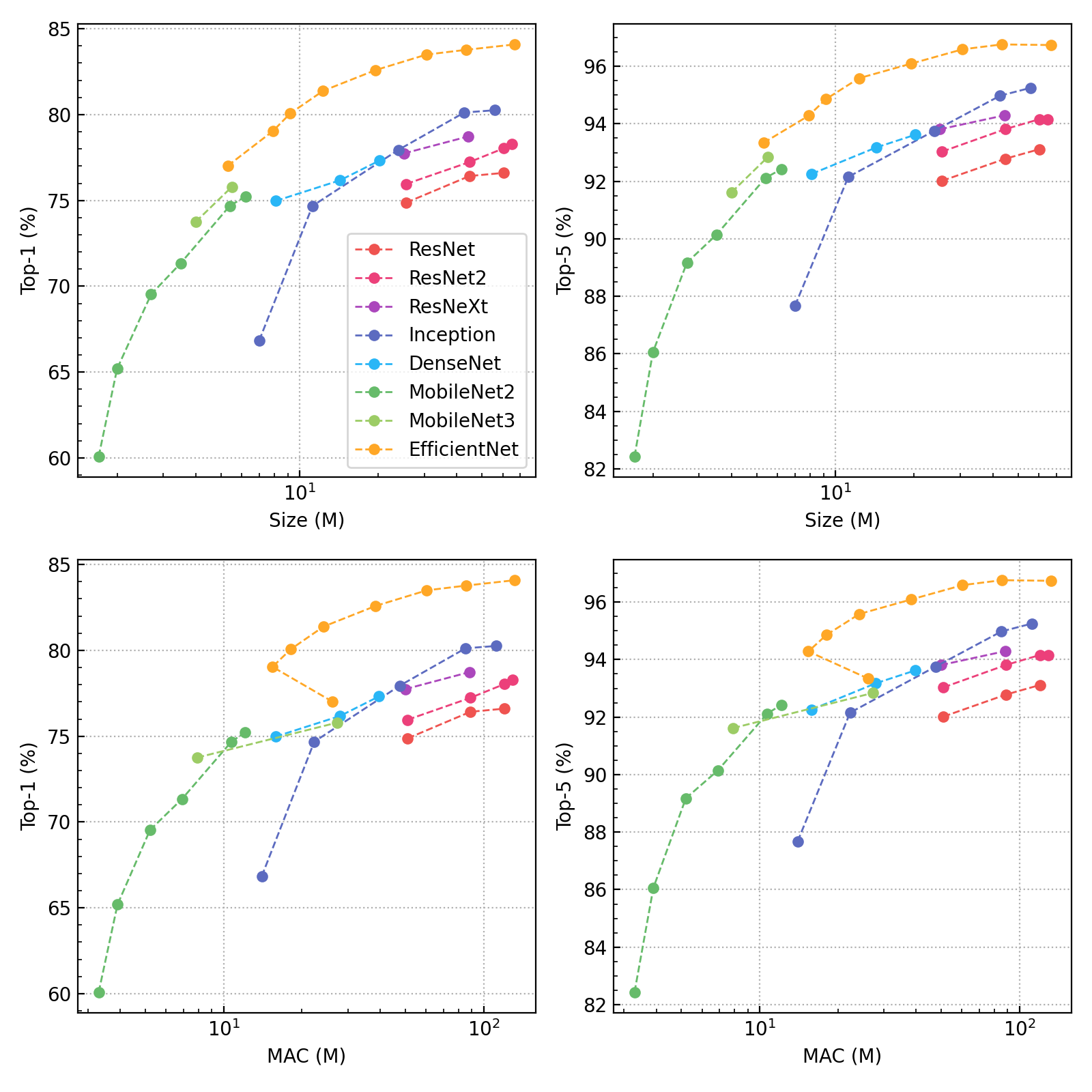

Image Classification

The following table summarizes the performance of image classification models on the ImageNet validation set.

- Input: The input image size (height/width) fed into the model.

- Top-1: Top-1 accuracy (%) on a single center crop.

- Top-5: Top-5 accuracy (%) on a single center crop.

- MAC: The number of Multiply-Accumulate operations, rounded (in Millions), measured with tf.profiler.

- Size: The total number of model parameters (in Millions), including fully-connected layers.

- Stem: The number of parameters in the convolutional base (in Millions), excluding fully-connected layers.

- Speed: Inference time in milliseconds for a batch of 100 images, measured on an NVIDIA Tesla P100 GPU.
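The Top-1 and Top-5 definitions above can be illustrated with a minimal, framework-free sketch. It assumes per-class scores are already available for each image; the helper name `top_k_accuracy` and the toy data are hypothetical and not part of TensorNets, and the center-crop preprocessing step is not shown.

```python
def top_k_accuracy(scores, labels, k=1):
    """Percentage of samples whose true label is among the k highest scores.

    scores: list of per-class score lists, one list per image (assumed layout).
    labels: list of integer ground-truth class indices.
    """
    if not labels or len(scores) != len(labels):
        raise ValueError("scores and labels must be non-empty and equally long")
    hits = 0
    for row, label in zip(scores, labels):
        # Indices of the k largest scores for this image.
        top_k = sorted(range(len(row)), key=lambda i: row[i], reverse=True)[:k]
        hits += label in top_k
    return 100.0 * hits / len(labels)


# Toy data: 4 images, 3 classes (made-up numbers, not benchmark results).
toy_scores = [
    [0.7, 0.2, 0.1],
    [0.1, 0.3, 0.6],
    [0.4, 0.5, 0.1],
    [0.2, 0.3, 0.5],
]
toy_labels = [0, 2, 0, 1]

top1 = top_k_accuracy(toy_scores, toy_labels, k=1)  # 2 of 4 correct
top2 = top_k_accuracy(toy_scores, toy_labels, k=2)  # 4 of 4 correct
print(top1, top2)
```

On ImageNet the same computation would run over the 50,000 validation images with k=1 and k=5.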

| Model | Input | Top-1 | Top-5 | MAC | Size | Stem | Speed | References |

|---|---|---|---|---|---|---|---|---|

| ResNet50 | 224 | 74.874 | 92.018 | 51.0M | 25.6M | 23.6M | 195.4 | [paper] [tf-slim] [torch-fb] [caffe] [keras] |

| ResNet101 | 224 | 76.420 | 92.786 | 88.9M | 44.7M | 42.7M | 311.7 | [paper] [tf-slim] [torch-fb] [caffe] |

| ResNet152 | 224 | 76.604 | 93.118 | 120.1M | 60.4M | 58.4M | 439.1 | [paper] [tf-slim] [torch-fb] [caffe] |

| ResNet50v2 | 299 | 75.960 | 93.034 | 51.0M | 25.6M | 23.6M | 209.7 | [paper] [tf-slim] [torch-fb] |

| ResNet101v2 | 299 | 77.234 | 93.816 | 88.9M | 44.7M | 42.6M | 326.2 | [paper] [tf-slim] [torch-fb] |

| ResNet152v2 | 299 | 78.032 | 94.162 | 120.1M | 60.4M | 58.3M | 455.2 | [paper] [tf-slim] [torch-fb] |

| ResNet200v2 | 224 | 78.286 | 94.152 | 129.0M | 64.9M | 62.9M | 618.3 | [paper] [tf-slim] [torch-fb] |

| ResNeXt50c32 | 224 | 77.740 | 93.810 | 49.9M | 25.1M | 23.0M | 267.4 | [paper] [torch-fb] |

| ResNeXt101c32 | 224 | 78.730 | 94.294 | 88.1M | 44.3M | 42.3M | 427.9 | [paper] [torch-fb] |

| ResNeXt101c64 | 224 | 79.494 | 94.592 | 0.0M | 83.7M | 81.6M | 877.8 | [paper] [torch-fb] |

| WideResNet50 | 224 | 78.018 | 93.934 | 137.6M | 69.0M | 66.9M | 358.1 | [paper] [torch] |

| Inception1 | 224 | 66.840 | 87.676 | 14.0M | 7.0M | 6.0M | 165.1 | [paper] [tf-slim] [caffe-zoo] |

| Inception2 | 224 | 74.680 | 92.156 | 22.3M | 11.2M | 10.2M | 134.3 | [paper] [tf-slim] |

| Inception3 | 299 | 77.946 | 93.758 | 47.6M | 23.9M | 21.8M | 314.6 | [paper] [tf-slim] [keras] |

| Inception4 | 299 | 80.120 | 94.978 | 85.2M | 42.7M | 41.2M | 582.1 | [paper] [tf-slim] |

| InceptionResNet2 | 299 | 80.256 | 95.252 | 111.5M | 55.9M | 54.3M | 656.8 | [paper] [tf-slim] |

| NASNetAlarge | 331 | 82.498 | 96.004 | 186.2M | 93.5M | 89.5M | 2081 | [paper] [tf-slim] |

| NASNetAmobile | 224 | 74.366 | 91.854 | 15.3M | 7.7M | 6.7M | 165.8 | [paper] [tf-slim] |

| PNASNetlarge | 331 | 82.634 | 96.050 | 171.8M | 86.2M | 81.9M | 1978 | [paper] [tf-slim] |

| VGG16 | 224 | 71.268 | 90.050 | 276.7M | 138.4M | 14.7M | 348.4 | [paper] [keras] |

| VGG19 | 224 | 71.256 | 89.988 | 287.3M | 143.7M | 20.0M | 399.8 | [paper] [keras] |

| DenseNet121 | 224 | 74.972 | 92.258 | 15.8M | 8.1M | 7.0M | 202.9 | [paper] [torch] |

| DenseNet169 | 224 | 76.176 | 93.176 | 28.0M | 14.3M | 12.6M | 219.1 | [paper] [torch] |

| DenseNet201 | 224 | 77.320 | 93.620 | 39.6M | 20.2M | 18.3M | 272.0 | [paper] [torch] |

| MobileNet25 | 224 | 51.582 | 75.792 | 0.9M | 0.5M | 0.2M | 34.46 | [paper] [tf-slim] |

| MobileNet50 | 224 | 64.292 | 85.624 | 2.6M | 1.3M | 0.8M | 52.46 | [paper] [tf-slim] |

| MobileNet75 | 224 | 68.412 | 88.242 | 5.1M | 2.6M | 1.8M | 70.11 | [paper] [tf-slim] |

| MobileNet100 | 224 | 70.424 | 89.504 | 8.4M | 4.3M | 3.2M | 83.41 | [paper] [tf-slim] |

| MobileNet35v2 | 224 | 60.086 | 82.432 | 3.3M | 1.7M | 0.4M | 57.04 | [paper] [tf-slim] |

| MobileNet50v2 | 224 | 65.194 | 86.062 | 3.9M | 2.0M | 0.7M | 64.35 | [paper] [tf-slim] |

| MobileNet75v2 | 224 | 69.532 | 89.176 | 5.2M | 2.7M | 1.4M | 88.68 | [paper] [tf-slim] |

| MobileNet100v2 | 224 | 71.336 | 90.142 | 6.9M | 3.5M | 2.3M | 93.82 | [paper] [tf-slim] |

| MobileNet130v2 | 224 | 74.680 | 92.122 | 10.7M | 5.4M | 3.8M | 130.4 | [paper] [tf-slim] |

| MobileNet140v2 | 224 | 75.230 | 92.422 | 12.1M | 6.2M | 4.4M | 132.9 | [paper] [tf-slim] |

| MobileNet75v3large | 224 | 73.754 | 91.618 | 7.9M | 4.0M | 2.7M | 79.73 | [paper] [tf-slim] |

| MobileNet100v3large | 224 | 75.790 | 92.840 | 27.3M | 5.5M | 4.2M | 94.71 | [paper] [tf-slim] |

| MobileNet100v3largemini | 224 | 72.706 | 90.930 | 7.8M | 3.9M | 2.7M | 70.57 | [paper] [tf-slim] |

| MobileNet75v3small | 224 | 66.138 | 86.534 | 4.1M | 2.1M | 1.0M | 37.78 | [paper] [tf-slim] |

| MobileNet100v3small | 224 | 68.318 | 87.942 | 5.1M | 2.6M | 1.5M | 42.00 | [paper] [tf-slim] |

| MobileNet100v3smallmini | 224 | 63.440 | 84.646 | 4.1M | 2.1M | 1.0M | 29.65 | [paper] [tf-slim] |

| EfficientNetB0 | 224 | 77.012 | 93.338 | 26.2M | 5.3M | 4.0M | 147.1 | [paper] [tf-tpu] |

| EfficientNetB1 | 240 | 79.040 | 94.284 | 15.4M | 7.9M | 6.6M | 217.3 | [paper] [tf-tpu] |

| EfficientNetB2 | 260 | 80.064 | 94.862 | 18.1M | 9.2M | 7.8M | 296.4 | [paper] [tf-tpu] |

| EfficientNetB3 | 300 | 81.384 | 95.586 | 24.2M | 12.3M | 10.8M | 482.7 | [paper] [tf-tpu] |

| EfficientNetB4 | 380 | 82.588 | 96.094 | 38.4M | 19.5M | 17.7M | 959.5 | [paper] [tf-tpu] |

| EfficientNetB5 | 456 | 83.496 | 96.590 | 60.4M | 30.6M | 28.5M | 1872 | [paper] [tf-tpu] |

| EfficientNetB6 | 528 | 83.772 | 96.762 | 85.5M | 43.3M | 41.0M | 3503 | [paper] [tf-tpu] |

| EfficientNetB7 | 600 | 84.088 | 96.740 | 131.9M | 66.7M | 64.1M | 6149 | [paper] [tf-tpu] |

| SqueezeNet | 224 | 54.434 | 78.040 | 2.5M | 1.2M | 0.7M | 71.43 | [paper] [caffe] |
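A few derived quantities can help when comparing rows of the table. The sketch below assumes the column definitions given above (Speed is per batch of 100 images; Size includes and Stem excludes the fully-connected layers). The helper names are hypothetical, and the per-image figure is an amortized value over the batch, not a measured single-image latency.

```python
BATCH_SIZE = 100  # the Speed column is measured on batches of 100 images


def per_image_latency_ms(batch_speed_ms, batch_size=BATCH_SIZE):
    """Amortized milliseconds per image within one batch."""
    return batch_speed_ms / batch_size


def images_per_second(batch_speed_ms, batch_size=BATCH_SIZE):
    """Throughput implied by the batch inference time."""
    return 1000.0 * batch_size / batch_speed_ms


def head_params_millions(size_m, stem_m):
    """Parameters outside the convolutional base (Size minus Stem), in Millions."""
    return size_m - stem_m


# Values copied from the ResNet50 and VGG16 rows of the table.
resnet50 = {"size": 25.6, "stem": 23.6, "speed": 195.4}
vgg16 = {"size": 138.4, "stem": 14.7, "speed": 348.4}

resnet50_latency = per_image_latency_ms(resnet50["speed"])
resnet50_throughput = images_per_second(resnet50["speed"])
resnet50_head = head_params_millions(resnet50["size"], resnet50["stem"])
vgg16_head = head_params_millions(vgg16["size"], vgg16["stem"])

print(f"ResNet50: {resnet50_latency:.3f} ms/image, {resnet50_throughput:.1f} images/s")
print(f"Head parameters: ResNet50 {resnet50_head:.1f}M, VGG16 {vgg16_head:.1f}M")
```

The Size and Stem gap shows that most of VGG16's parameters sit in its fully-connected layers, while ResNet50's sit almost entirely in the convolutional base.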

Object Detection

Object detection models can be coupled with any classification network. The following tables show performance for specific combinations with pre-trained weights.

- mAP: Mean Average Precision.

- Size: Total number of model parameters (in Millions).

- Speed: Inference time in milliseconds for a single image.

- FPS: Frames Per Second (1000 / Speed).

PASCAL VOC2007 test

| Model | mAP | Size | Speed | FPS | References |

|---|---|---|---|---|---|

| YOLOv3VOC (416) | 0.7423 | 62M | 24.09 | 41.51 | [paper] [darknet] [darkflow] |

| YOLOv2VOC (416) | 0.7320 | 51M | 14.75 | 67.80 | [paper] [darknet] [darkflow] |

| TinyYOLOv2VOC (416) | 0.5303 | 16M | 6.534 | 153.0 | [paper] [darknet] [darkflow] |

| FasterRCNN_ZF_VOC | 0.4466 | 59M | 241.4 | 4.143 | [paper] [caffe] [roi-pooling] |

| FasterRCNN_VGG16_VOC | 0.6872 | 137M | 300.7 | 3.325 | [paper] [caffe] [roi-pooling] |

MS COCO val2014

| Model | mAP | Size | Speed | FPS | References |

|---|---|---|---|---|---|

| YOLOv3COCO (608) | 0.6016 | 62M | 60.66 | 16.49 | [paper] [darknet] [darkflow] |

| YOLOv3COCO (416) | 0.6028 | 62M | 40.23 | 24.85 | [paper] [darknet] [darkflow] |

| YOLOv2COCO (608) | 0.5189 | 51M | 45.88 | 21.80 | [paper] [darknet] [darkflow] |

| YOLOv2COCO (416) | 0.4922 | 51M | 21.66 | 46.17 | [paper] [darknet] [darkflow] |
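The relationship between the Speed and FPS columns (FPS = 1000 / Speed) can be checked with a short sketch. The helper name `fps_from_speed` is hypothetical; the Speed values are copied from the PASCAL VOC2007 table.

```python
def fps_from_speed(speed_ms):
    """Convert single-image inference time in milliseconds to frames per second."""
    if speed_ms <= 0:
        raise ValueError("speed_ms must be positive")
    return 1000.0 / speed_ms


# Speed values (ms per image) from the PASCAL VOC2007 table.
voc_speed_ms = {
    "YOLOv3VOC (416)": 24.09,
    "YOLOv2VOC (416)": 14.75,
    "TinyYOLOv2VOC (416)": 6.534,
}

fps = {name: fps_from_speed(ms) for name, ms in voc_speed_ms.items()}
for name, value in fps.items():
    print(f"{name}: {value:.2f} FPS")
```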